A cloud GPU provider lets businesses use GPU (graphics processing unit) compute power on demand without investing in their own high-performance computing infrastructure.

Companies use cloud GPU providers to carry out different AI workloads, including:

- Data Processing Workloads: For cleaning, handling, and preparing data for model training.

- Machine Learning Workloads: For training, developing, and deploying ML algorithms.

- Natural Language Processing (NLP): For algorithms that teach machines to understand, generate, and interpret human language.

- Deep Learning Workloads: For training and deploying neural networks.

- Generative AI: For training Gen AI models, such as Large Language Models (LLMs), that create new content, including text, images, and videos.

- Computer Vision: For training machines to interpret visual data for decision-making.

By shifting AI workloads to cloud GPU providers, businesses get the following benefits:

- Enhanced decision-making backed by data.

- Improved efficiency through automation of repetitive tasks.

- Ability to solve complex problems, improve services, and develop new products with advanced AI algorithms.

The Geekflare team has researched and listed the best cloud GPU providers based on factors like GPU model, performance, pricing, scalability, integration, and security.

1. Google Cloud GPUs

Google Cloud offers high-performance GPUs ideal for computing jobs such as 3D visualization, generative AI, and high-performance computing (HPC). Businesses can choose from a wide GPU selection, including:

- NVIDIA H100

- L4

- P100

- P4

- V100

- T4

- A100

These GPUs are customizable and offer flexible performance with high-performance disk, memory, and processor options. Each instance supports up to 8 GPUs, and per-second billing means you pay only for what you use.

Additionally, businesses utilizing Google Cloud GPUs can take advantage of GCP infrastructure, including its networking, storage, and data analytics capabilities.

Google Cloud GPU Pricing

GCP GPUs offer pay-as-you-go pricing as follows:

- NVIDIA T4: Starts at $0.35 per GPU per hour (1 GPU and 16 GB GDDR6).

- NVIDIA P4: Starts at $0.60 per GPU per hour (1 GPU and 8 GB GDDR5).

- NVIDIA V100: Starts at $2.48 per GPU per hour (1 GPU and 16 GB HBM2).

- NVIDIA P100: Starts at $1.46 per GPU per hour (1 GPU and 16 GB HBM2).

- NVIDIA T4 Virtual Workstation: Starts at $0.55 per GPU per hour (1 GPU and 16 GB GDDR6).

- NVIDIA P4 Virtual Workstation: Starts at $0.80 per GPU per hour (1 GPU and 8 GB GDDR5).

- NVIDIA P100 Virtual Workstation: Starts at $1.66 per GPU per hour (1 GPU and 16 GB HBM2).

Committing to a longer term (1 year or 3 years) lowers the price.
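To see what per-second billing means in practice, here is a minimal sketch that estimates the GPU cost of a short job. It assumes the $0.35 T4 rate listed above is charged per GPU per hour. The function name `job_cost` and the 95-minute job are hypothetical, and a real bill would also include CPU, memory, and disk charges.

```python
def job_cost(rate_per_gpu_hour: float, gpus: int, seconds: int) -> float:
    """Estimate GPU cost when usage is billed per second.

    rate_per_gpu_hour is assumed to be an hourly rate for a single GPU.
    """
    rate_per_second = rate_per_gpu_hour / 3600
    return rate_per_second * gpus * seconds


# Hypothetical job: 2 x T4 for 95 minutes at the $0.35 rate quoted above.
cost = job_cost(0.35, gpus=2, seconds=95 * 60)
print(f"Estimated GPU cost: ${cost:.2f}")
```

Under these assumptions the job costs about $1.11. If usage were rounded up to full hours instead, the same job would be billed as two hours on two GPUs, or $1.40.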

2. Amazon Web Services (AWS)

AWS offers cloud GPUs in collaboration with NVIDIA, providing cost-effective, flexible, and powerful GPU-based solutions.

AWS provides the following solutions:

- NVIDIA AI Enterprise: An end-to-end cloud-native software platform that offers pre-trained models, accelerates data science pipelines, and gives data scientists the tools to develop and deploy production-grade AI apps. It also provides tools for management and orchestration, ensuring security, performance, and reliability.

- EC2 GPU instances: Alongside its high-performance EC2 P5 instances, AWS offers G4 instances in two variants: G4dn (NVIDIA GPUs) and G4ad (AMD GPUs). Scientists and engineers can use these instances to run compute-intensive workloads that need fast cloud GPUs, strong network performance, large amounts of memory, and fast storage.

- NVIDIA DeepStream and AWS IoT Greengrass: DeepStream allows teams to overcome ML model size and complexity restrictions when working with IoT devices. Moreover, AWS IoT Greengrass can extend AWS cloud services to NVIDIA-based edge devices.

- NVIDIA Omniverse: Provides teams with a computing platform to develop 3D-based workflows and applications with real-world simulation and understanding. It also helps improve collaboration and aspects such as remote monitoring or virtual prototyping.

- Virtual Workstations: AWS Cloud solutions also provide powerful virtual workstations powered by NVIDIA. Teams can use them to carry out complex video editing, 3D modeling, and AI development.

AWS Pricing

AWS offers a free tier where you can try its cloud GPU services. For an exact quote, contact its sales team.

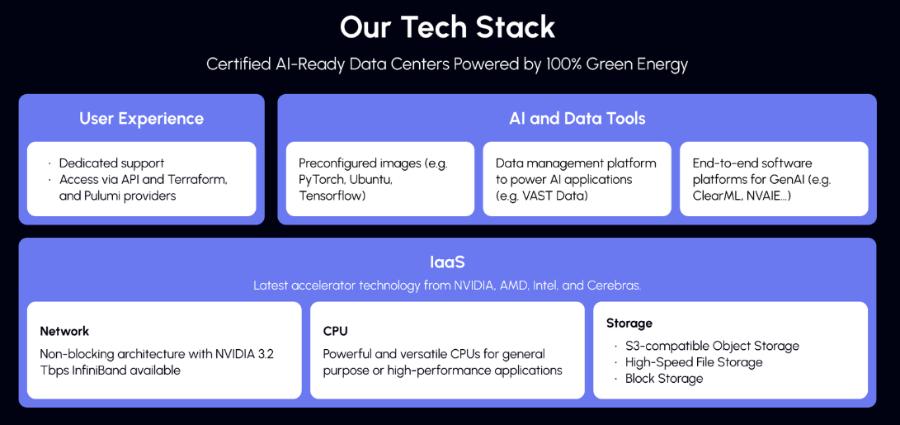

3. Hyperstack

Hyperstack is a cutting-edge GPU-as-a-Service (GPUaaS) platform that allows users to deploy workloads effortlessly in the cloud. Under the hood, Hyperstack utilizes the latest NVIDIA hardware. It is also cheaper compared to public cloud providers such as AWS and Google Cloud.

With Hyperstack, you only pay for what you consume, making it an economical choice for businesses of all sizes. The platform delivers excellent speed and performance through its virtual machines, which are optimized for high-intensity computing tasks.

Hyperstack caters to a wide range of users, from tech enthusiasts and SMEs to large enterprises and managed service providers (MSPs). This scalability is a direct result of Hyperstack’s simplicity and ease of use.

The platform operates on 100% renewable energy while maintaining top-tier performance. As part of NVIDIA’s Inception program, Hyperstack also supports some of the most promising AI startups, providing them with enterprise-grade GPU acceleration. They have data centers in North America and Europe.

This makes Hyperstack an ideal choice for businesses looking to leverage powerful, scalable GPU infrastructure while contributing to a more sustainable future.

Hyperstack Pricing

Hyperstack pricing depends on the GPU model you choose. Currently, it offers nine GPU options, including the NVIDIA H100 SXM 80 GB model, which starts at $2.25/hour.

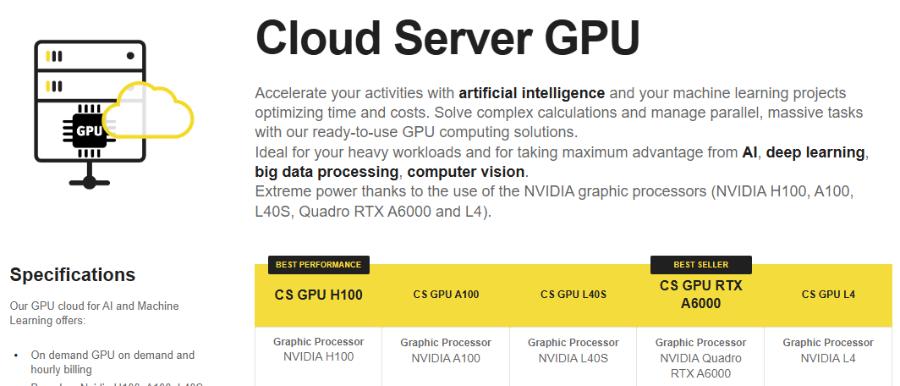

4. Seeweb

Seeweb helps you manage AI and machine learning projects with its arsenal of cloud GPU services. With Seeweb, you can work with multiple use cases, including NLP, VDI, big data, video processing, CUDA applications, genomics, seismic analysis, and more.

Based in Italy, Seeweb has data centers across the European Union for GDPR-compliant data privacy and minimum latency. It also offers superior performance with its dedicated hardware, while keeping costs economical with hourly, on-demand billing.

It boasts a ready-to-use tech stack that is based on powerful graphics cards, including NVIDIA A100, Quadro RTX A6000, RTX6000, A30 (MIG), and L4.

Seeweb’s IaC (Terraform) support allows for seamless infrastructure management by letting you define and control your GPU resources through code. Likewise, the platform integrates with Kubernetes for streamlined container orchestration to handle varying workloads effectively.

Moreover, Seeweb uses renewable energy resources and is committed to environmental sustainability with its DNSH and ISO 14001 certifications.

For security and privacy, Seeweb cloud infrastructure is ISO/IEC 27001, 27017, 27018, and 27701 certified, making it ideal for hosting sensitive health, finance, and other business data.

The platform provides a 99.99% uptime guarantee SLA, constant monitoring, 24/7/365 technical support, and protection against internet threats like DDoS attacks.
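Uptime percentages are easier to compare once converted into allowed downtime. The sketch below does that conversion. The helper name `allowed_downtime_minutes` is hypothetical, and it assumes the guarantee is measured over a 365-day year, which may differ from how a given provider's SLA defines its measurement window.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 365) -> float:
    """Minutes of downtime permitted by an uptime guarantee over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


# Compare a 99.99% guarantee with the 99.9% figure some providers quote.
for sla in (99.9, 99.99):
    minutes = allowed_downtime_minutes(sla)
    print(f"{sla}% uptime allows about {minutes:.0f} minutes of downtime per year")
```

Under that assumption, a 99.99% guarantee allows roughly 53 minutes of downtime per year, while 99.9% allows close to 9 hours.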

Seeweb Pricing

Seeweb offers the following pricing:

- Cloud Server GPU: Access to multiple GPUs (CS GPU L4, CS GPU L40S, etc.) with pricing starting at €0.38/hour (€279/month).

- Serverless GPU: Access to multiple GPUs (H100, A100, etc.) with prices starting at €0.42/hour.

- Cloud Server NPU: Access to CS NPU1 and CS NPU 2 with prices starting at €0.07/hour.

5. IBM Cloud

IBM Cloud offers flexibility, power, and GPU options powered by NVIDIA. It provides seamless integration with the IBM Cloud architecture, applications, and APIs, along with a distributed network of data centers globally.

The platform uses NVIDIA GPUs to carry out tasks, including traditional AI, HPC, and Generative AI. With IBM Cloud, you can train, fine-tune, and run inference on infrastructure optimized for AI workflows. IBM's AI infrastructure gives access to many GPUs, including NVIDIA L4, L40S, and Tesla V100, with 64 to 320 GB RAM and bandwidth ranging from 16 Gbps to 128 Gbps.

IBM Cloud offers a hybrid design stack for Gen AI deployment, with access to AI assistants, an AI platform, a data platform, a hybrid cloud platform, and GPU infrastructure.

IBM Cloud Pricing

IBM Cloud offers a handy tool for calculating pricing. Its cheapest V100 GPU configuration costs $3.024/hour and includes 8 vCPUs, 64 GB RAM, and 16 Gbps bandwidth.

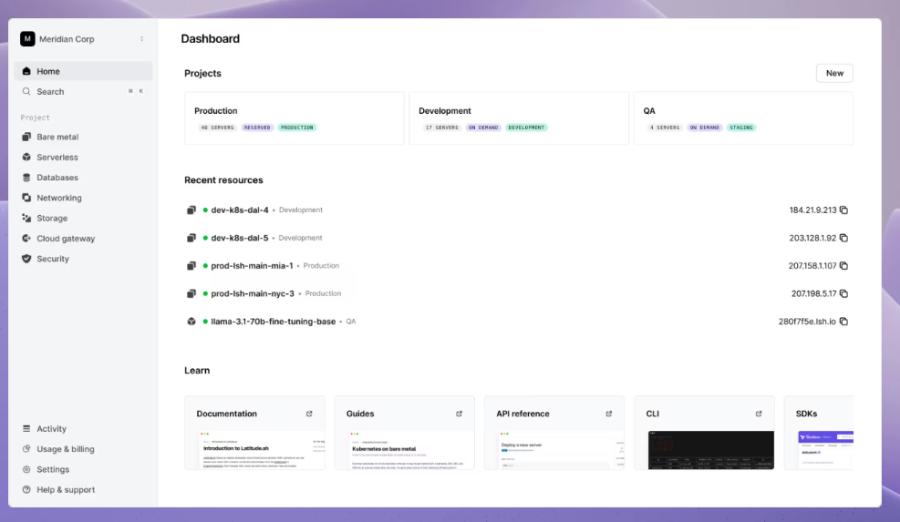

6. Latitude.sh

Latitude.sh is specifically designed to supercharge AI and machine learning workloads. Powered by NVIDIA’s H100 GPUs, Latitude.sh’s infrastructure offers up to 2x faster model training compared to competing GPUs like the A100.

Opting for Latitude.sh means you can deploy high-performance dedicated servers across 18+ global locations (such as Sydney, Frankfurt, Tokyo, and others), ensuring minimum latency and optimal performance.

Each instance is optimized for AI workloads and comes pre-installed with deep learning tools like TensorFlow, PyTorch, and Jupyter.
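Before launching a framework on a freshly provisioned GPU server, it helps to confirm that the GPU is actually visible to the operating system. The following is a minimal sketch, not specific to Latitude.sh, that assumes the NVIDIA driver and its `nvidia-smi` tool are installed. The helper name `list_gpus` is hypothetical.

```python
import shutil
import subprocess


def list_gpus() -> list[str]:
    """Return one 'name, memory' line per GPU, or [] if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []  # NVIDIA driver tools are not installed on this machine
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []  # the tool exists but could not talk to a GPU
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_gpus()
print(gpus if gpus else "No NVIDIA GPU detected")
```

The function returns an empty list instead of raising an error, so the same script can run on a laptop without a GPU and on a cloud instance.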

Latitude.sh’s API-first approach simplifies automation, making it effortless to integrate with tools like Terraform. Latitude.sh’s intuitive dashboard allows you to create views, manage projects, and add resources in just a few clicks.

Latitude.sh Pricing

Latitude.sh cloud GPU pricing starts at $3/hour for 1 x NVIDIA H100 80 GB, 2 x 3.7 TB NVMe, and a 2 x 10 Gbps network.

7. Genesis Cloud

Genesis Cloud is an AI cloud provider that is affordable, highly reliable, and secure. Its accelerated GPU cloud is priced up to 80% lower than legacy clouds.

Its Genesis Enterprise AI Cloud offers businesses an end-to-end machine learning platform and advanced data management. Genesis Cloud also partners with many efficient data centers worldwide to support a wide range of applications.

Genesis Cloud offers extensive GPU options, such as the HGX H100 and the upcoming NVIDIA Blackwell architecture GPUs, including B200, GB200, and GB200 NVL72.

Additionally, to ensure optimal efficiency, they provide:

- AI-optimized storage solutions without any ingress or egress fees.

- Fast, scalable, and elastic networks for AI with multi-node support.

- Tier 3 data centers with a 99.9% uptime guarantee.

- Better privacy and security with ISO 27001 certification.

- 100% green energy and low PUE to protect the environment and save business costs.

Genesis Cloud Pricing

Genesis Cloud's pricing is as follows:

| Plan | Hourly Price | Offerings |
|---|---|---|
| Inference Workload | Starts at $0.20/h | Option to choose from a variety of GPUs. |
| NVIDIA HGX H100/H200 | Starts at $2.00/h | Ideal for long-term contracts with customized configurations. |

8. Paperspace CORE

Paperspace CORE offers next-gen accelerated computing infrastructure with a fast network, 3D app support, instant provisioning, and full API access. It is also easy to use, with a straightforward interface for onboarding.

With Paperspace CORE, you can build and run AI/ML models using NVIDIA H100 GPUs on DigitalOcean (DigitalOcean acquired Paperspace in 2023).

Paperspace CORE’s key features include the following:

- Offers zero-configuration Notebook IDE with collaboration feature.

- Saves up to 70% on compute costs compared to major public clouds.

- Provides infrastructure abstraction with job scheduling, resource provisioning and more.

- Offers insights to improve the overall process, including permissions, team utilization, and more.

- Powerful management console that lets you perform tasks quickly, such as adding VPN and managing network configurations.

Paperspace CORE Pricing

Paperspace CORE offers Platform and Compute plans. The Platform plans are listed below.

| Plan | Monthly Price | Offerings |
|---|---|---|
| Free | $0 | Ideal for beginners who want to use public projects and basic instances. |
| Pro | $8 | Ideal for ML/AI engineers and researchers with private projects and mid-range instances. |
| Growth | $39 | Ideal for teams, research groups, and startups requiring high storage and high-end instances. |

They also offer on-demand compute, with prices ranging from $0.76/hour (A4000) to $2.24/hour (H100).

9. OVHcloud

OVHcloud provides cloud servers designed to process massively parallel workloads. Its GPU instances integrate NVIDIA Tesla V100 graphics processors to meet deep learning and machine learning needs.

They help accelerate graphic computing and artificial intelligence. OVHcloud partners with NVIDIA to offer the best GPU-accelerated platform for high-performance computing, AI, and deep learning.

The platform provides a complete catalog for easy deployment and management of GPU-accelerated containers. Developers can attach up to four cards directly to an instance via PCI Passthrough, with no virtualization layer in between, so all of the cards' power is dedicated to the workload.

As for certifications, OVHcloud’s services and infrastructures are ISO/IEC 27017, 27001, 27701, and 27018 certified. The certifications indicate that OVHcloud has an Information Security Management System (ISMS) to manage vulnerabilities, implement business continuity, manage risks, and implement a privacy information management system (PIMS).

Companies that need high compute performance can use OVHcloud's Cloud GPU for:

- Image recognition, classifying data from images.

- Real-time situation analysis, such as self-driving cars and internet network traffic analysis.

- Human interaction, where machines communicate with people through sound and video.

- Training and optimizing AI models with GPU computing resources.

OVHcloud Pricing

There are no set pricing plans for OVHcloud. To get a tailored quote, you’ll have to contact their sales team with your requirements.

10. RunPod

RunPod is an all-in-one cloud that lets engineers easily develop, train, and scale AI models. It offers a globally distributed GPU cloud across 30 regions, giving you the necessary infrastructure to train, build, and deploy ML models.

With RunPod, you can spin up GPU pods in seconds and start deploying them. Its 50+ templates, such as PyTorch, Docker, and others, give you ready-to-use machines. It also supports custom containers—all through an easy-to-use CLI.

RunPod’s key features include:

- Zero fees for egress/ingress.

- 99.99% uptime guarantee.

- Global interoperability.

- Cheap network storage with 10 PB+ capacity.

- Supports serverless ML inference scaling.

- Get real-time usage analytics for all the endpoints.

- Easily debug endpoints with execution time analytics and real-time logs.

- Provides autoscaling in 8+ regions.

- Enterprise-ready with secure infrastructure and world-class compliance.

RunPod Pricing

RunPod offers a pay-per-use model. To use RunPod’s cloud GPUs, you must add credit or automate payments using a credit card. Furthermore, it offers a spending limit. You need to register an account and input your requirements to know the exact cost.

11. Lambda GPU

Lambda GPU Cloud offers NVIDIA GPUs for training deep learning, ML, and AI models, and lets you scale easily from a single machine to a full fleet of VMs.

It offers a complete Lambda Stack with all the necessary AI software. Instances come pre-installed with Ubuntu, PyTorch, NVIDIA CUDA, and the latest version of the Lambda Stack, which bundles CUDA drivers and deep learning frameworks. Furthermore, it comes with one-click Jupyter access from the browser.

Lambda GPU’s key features include:

- NVIDIA H100s on demand.

- Comes with 1-click clusters featuring Quantum-2 InfiniBand and NVIDIA H100 Tensor Core GPUs.

- Use SSH directly with one of the SSH keys or connect through the Web Terminal in the cloud dashboard for direct access.

- Supports a maximum of 10 Gbps of inter-node bandwidth, ideal for frameworks that support distributed training, such as Horovod.

- Savings up to 50% on computing with reduced cloud TCO.
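Inter-node bandwidth matters for distributed training because nodes exchange gradients at every step. As a rough illustration, the sketch below computes the ideal time to send one full set of gradients over the 10 Gbps link mentioned above. The model size (350 million 32-bit parameters) and the helper name `transfer_seconds` are hypothetical, and the estimate ignores latency, protocol overhead, and the details of all-reduce algorithms.

```python
def transfer_seconds(num_bytes: float, link_gbps: float) -> float:
    """Ideal time to move num_bytes over a link of link_gbps gigabits per second."""
    bits = num_bytes * 8
    return bits / (link_gbps * 1e9)


# Hypothetical model: 350 million parameters stored as 4-byte floats.
params = 350_000_000
gradient_bytes = params * 4

seconds = transfer_seconds(gradient_bytes, link_gbps=10)
print(f"{seconds:.2f} s per full gradient exchange")
```

Under these assumptions a single exchange takes about 1.12 seconds, which is why larger models often rely on faster interconnects or gradient compression.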

Lambda GPU Pricing

Lambda GPU offers on-demand and reserved GPUs. Its on-demand Cloud GPU pricing ranges from $0.50/GPU/hr (NVIDIA Quadro RTX 6000) to $2.99/GPU/hr (8x NVIDIA H100 SXM). For reserved GPUs, you need to contact their sales.

12. TensorDock

TensorDock is an ideal pick for companies looking for affordable GPU servers. It offers on-demand GPU resources at low prices and the option to create the server in 30 seconds.

Businesses can also choose from its wide selection of 45 available GPU models, including the budget RTX 4090 and more premium high-performance computing GPUs such as HGX H100s.

Furthermore, TensorDock supports KVM virtualization with complete root access. It also supports Windows 10, multithreading, and optimized end-to-end VM deployment speed.

TensorDock’s key features include:

- Access to VM templates such as Docker for easy deployment.

- Up to 30,000 GPUs are available across 100+ locations.

- Well-documented API.

- Excellent reliability with 99.99% uptime standards.

- Instant pre-configured VMs, deployable in seconds.

TensorDock Pricing

TensorDock offers Cloud GPUs for deep learning, rendering, and consumer-grade usage. Its pricing ranges from $0.08/hr (GTX 1070) to $2.42/hr (H100 SXM5).

13. Ori

Ori is an AI-native GPU cloud provider that offers cost-effective, customizable, and easy-to-use services. It offers a range of services, including bare metal and serverless Kubernetes.

Ori provides access to popular NVIDIA GPUs. The platform has also announced plans to add NVIDIA Blackwell architecture GPUs, including the B100, B200, and GB200, in the near future.

Ori Cloud key features include:

- Purpose-built, optimized GPU servers, ideal for a wide range of AI/ML applications.

- A broad range of GPU resources available for deployment.

- Offers access to various compute flavors, from serverless Kubernetes to bare metal.

Ori Pricing

Ori GPU cloud pricing ranges from $0.95/hr (NVIDIA V100S) to $3.80/hr (NVIDIA H100 SXM). It only offers on-demand plans.

14. Nebius

Nebius lets you build, tune, and run AI models on managed NVIDIA GPU infrastructure, including the latest H100/H200 GPU clusters.

It offers a full-stack AI platform including the following:

- AI Platform and Marketplace: Lists popular MLOps solutions, including Kubeflow, Airflow, Ray, and others. It also includes Managed PostgreSQL, Managed MLflow, and Managed Spark.

- AI Cloud: Offers access to scalable clusters with storage, networking, and orchestration solutions, including GPU clusters, managed K8s, Compute, Storage, and more.

- Hardware and Data Center: Access to energy-efficient data centers, a 3.2 Tbit/s InfiniBand network, and NVIDIA GPUs (H100, H200, L40S).

Furthermore, it lets you manage infrastructure as code using CLI, API, and Terraform. Nebius also provides access to 24/7 expert support.

Nebius Pricing

Nebius' pricing ranges from $1.18/hr (12-month reserve L40S) to $3.50/hr (6-month reserve H200).
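Several of the providers above quote a starting hourly rate for an NVIDIA H100, which makes a rough side-by-side comparison possible. The sketch below sorts those quoted rates and estimates the cost of a hypothetical 100 GPU-hour training run. The rates are copied from the pricing sections above, and the configurations behind them differ (SXM versus other form factors, bundled storage and networking), so treat the result as a starting point, not a like-for-like benchmark.

```python
# Starting H100 rates in USD per hour, as quoted in the provider sections above.
h100_rates = {
    "Hyperstack": 2.25,
    "Latitude.sh": 3.00,
    "Paperspace CORE": 2.24,
    "Lambda GPU": 2.99,
    "TensorDock": 2.42,
    "Ori": 3.80,
}

TRAINING_HOURS = 100  # hypothetical workload size in GPU-hours

# Print providers from cheapest to most expensive starting rate.
for provider, rate in sorted(h100_rates.items(), key=lambda item: item[1]):
    total = rate * TRAINING_HOURS
    print(f"{provider:<16} ${rate:.2f}/hr  ->  ${total:,.2f} for {TRAINING_HOURS} GPU-hours")
```

Providers that only publish reserved or contract pricing for the H100 are left out because their rates are not directly comparable to on-demand rates.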

What are the Benefits of Cloud GPU?

There are 5 main benefits of using cloud GPUs, as shown below.

- Improved Data-intensive Tasks: Cloud GPUs give companies, especially those in industries that depend on real-time data processing such as healthcare, the ability to tackle data-intensive tasks with improved performance. For example, cloud GPUs can handle complex big data analytics, machine learning, and AI. This leads to quicker, better decision-making and a competitive edge.

- Cost Efficiency: Cloud GPU removes the need to build, manage, and maintain GPU infrastructure, ensuring cost efficiency for growing businesses. Most Cloud GPU providers provide pay-as-you-go pricing, further reducing costs. Moreover, companies don’t have to worry about upgrading or increasing energy costs.

- Faster Time to Market: With cloud GPUs, companies can bring their products and services to market faster.

- Improved Scalability: Growing businesses can benefit from scalability options as most Cloud GPUs provide horizontal and vertical scaling with the option to scale down when needed.

- Improved Collaboration and Accessibility: Cloud-based GPU computing brings teams together to work on problems without geo-location restrictions.