Serving tech enthusiasts for over 25 years.

TechSpot means tech analysis and advice you can trust.

In context: The first official performance benchmarks for AMD's Instinct MI300X accelerator, designed for data center and AI applications, have surfaced. Against Nvidia's Hopper, the new chip posted mixed results in MLPerf Inference v4.1, an industry-standard benchmark suite that measures how quickly AI systems run inference workloads.

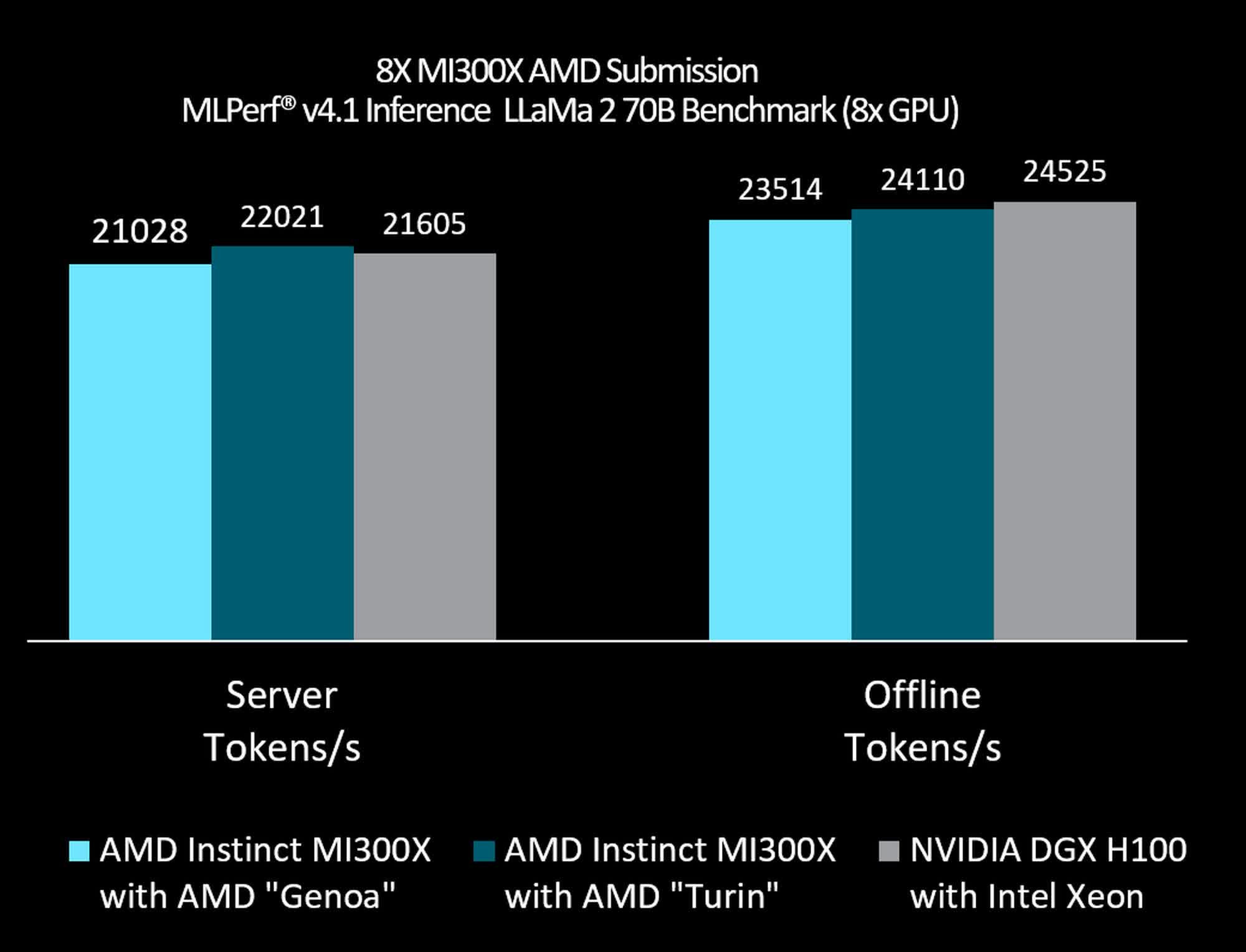

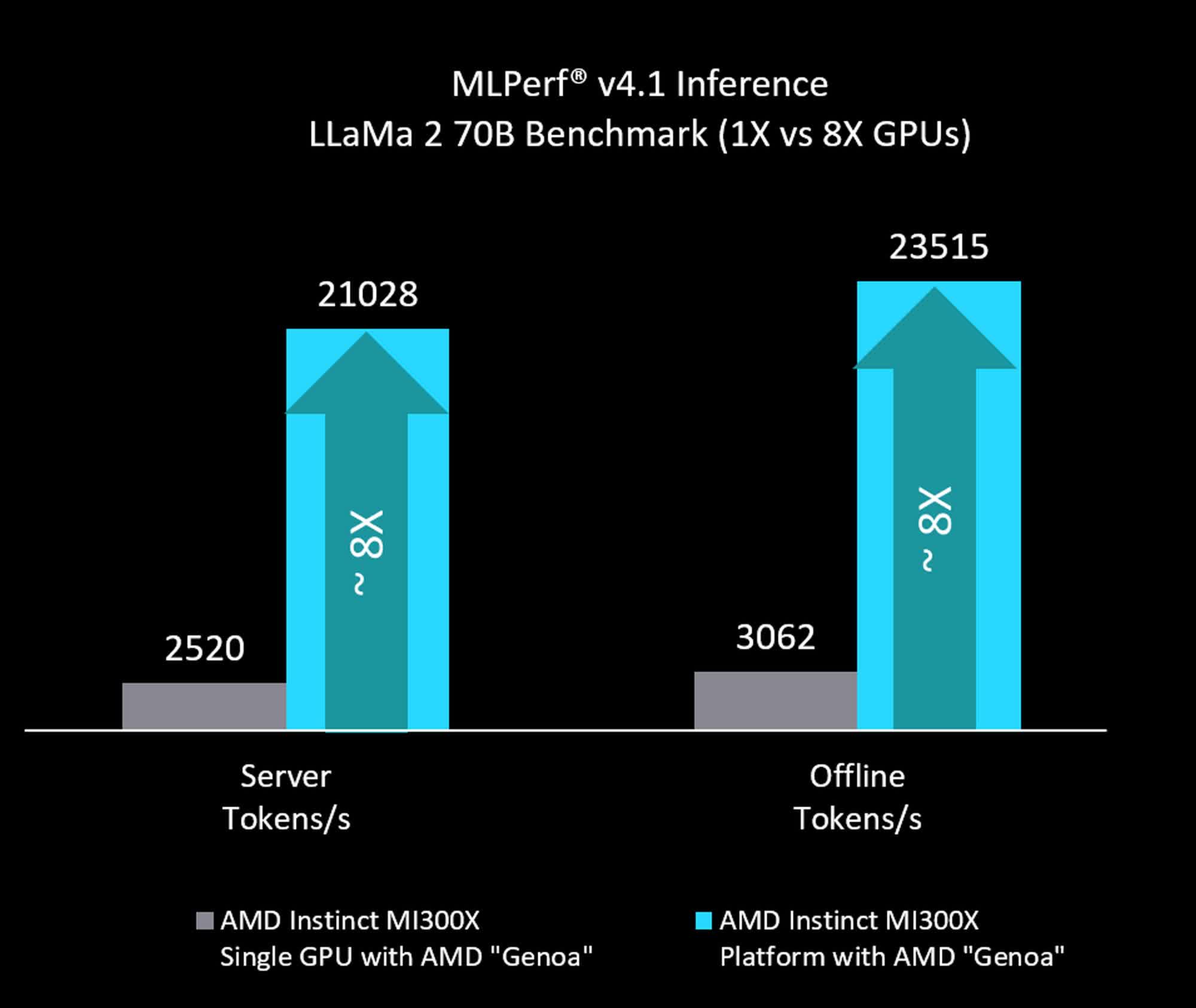

On Wednesday, AMD released benchmarks comparing the performance of its MI300X with Nvidia's H100 GPU to showcase its generative AI inference capabilities. On the Llama 2 70B model, a system with eight Instinct MI300X accelerators paired with an EPYC Genoa CPU reached a throughput of 21,028 tokens per second in server mode and 23,514 tokens per second in offline mode. Those numbers are slightly lower than the ones achieved by eight Nvidia H100 accelerators paired with an unspecified Intel Xeon processor: 21,605 tokens per second in server mode and 24,525 tokens per second in offline mode.

When paired with an EPYC Turin processor, the MI300X fared a little better, reaching 22,021 tokens per second in server mode, slightly ahead of the H100's 21,605. In offline mode, however, it still trailed the H100 system at 24,110 tokens per second.
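To put these gaps in proportion, the throughput figures quoted above can be expressed as percentage differences against the H100 system. The short Python sketch below does only that arithmetic; the dictionary layout and the `relative_delta` and `compare` names are illustrative and are not part of any MLPerf tooling.

```python
# Llama 2 70B throughput (tokens/s) for 8-accelerator systems, as quoted above.
RESULTS = {
    "MI300X + EPYC Genoa": {"server": 21_028, "offline": 23_514},
    "MI300X + EPYC Turin": {"server": 22_021, "offline": 24_110},
    "H100 + Xeon": {"server": 21_605, "offline": 24_525},
}
BASELINE = "H100 + Xeon"


def relative_delta(value: float, baseline: float) -> float:
    """Percentage difference of value versus baseline (positive = faster)."""
    return (value - baseline) / baseline * 100.0


def compare(results: dict, baseline: str) -> dict:
    """Map each non-baseline system to its per-mode percentage delta."""
    base = results[baseline]
    return {
        system: {mode: relative_delta(tps, base[mode]) for mode, tps in modes.items()}
        for system, modes in results.items()
        if system != baseline
    }


if __name__ == "__main__":
    for system, deltas in compare(RESULTS, BASELINE).items():
        for mode, pct in deltas.items():
            print(f"{system:<20} {mode:<8} {pct:+.1f}% vs {BASELINE}")
```

Run as a script, it shows the Genoa configuration about 2.7% behind in server mode and 4.1% behind in offline mode, while the Turin configuration is about 1.9% ahead in server mode and 1.7% behind in offline mode.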

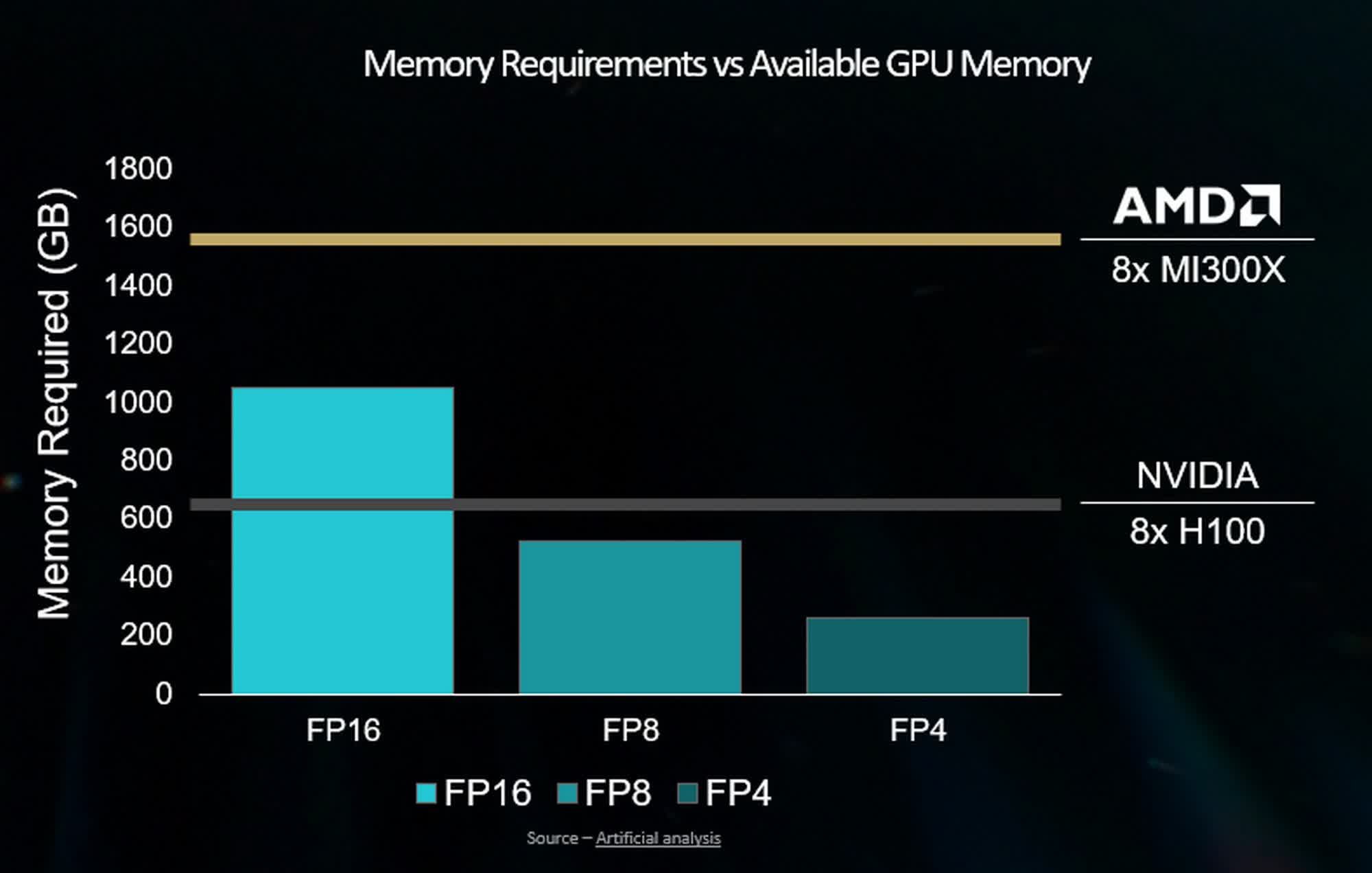

The MI300X offers more memory than the H100, potentially allowing it to run a 70-billion-parameter model like Llama 2 70B on a single GPU at FP8 precision, which avoids the network overhead of splitting the model across multiple GPUs. For reference, each Instinct MI300X carries 192 GB of HBM3 memory with a peak memory bandwidth of 5.3 TB/s, while the Nvidia H100 supports up to 80 GB of HBM3 memory with up to 3.35 TB/s of bandwidth.
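The single-GPU point comes down to simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter. The sketch below compares that footprint with the two HBM capacities quoted above. It assumes a nominal 70 billion parameters, decimal gigabytes, and weights only (no KV cache, activations, or framework overhead); the function names are illustrative.

```python
# Weight-only memory estimate: parameter count x bytes per parameter.
# Assumptions: decimal GB (1 GB = 1e9 bytes); KV cache, activations and
# framework overhead are ignored.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}
HBM_CAPACITY_GB = {"MI300X": 192, "H100": 80}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Memory needed just to hold the weights, in decimal GB."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def headroom_gb(num_params: float, precision: str, gpu: str) -> float:
    """HBM left on one accelerator after loading the weights (negative = does not fit)."""
    return HBM_CAPACITY_GB[gpu] - weight_footprint_gb(num_params, precision)


if __name__ == "__main__":
    params = 70e9  # nominal parameter count for Llama 2 70B (assumption)
    for precision in BYTES_PER_PARAM:
        need = weight_footprint_gb(params, precision)
        for gpu in HBM_CAPACITY_GB:
            room = headroom_gb(params, precision, gpu)
            print(f"{gpu} @ {precision}: {need:.0f} GB of weights, {room:+.0f} GB headroom")
```

At FP8, the roughly 70 GB of weights leaves about 122 GB of headroom on an MI300X but only about 10 GB on an 80 GB H100, comparatively little room for the key-value cache a serving workload needs. At FP16 (about 140 GB), the weights alone exceed a single H100.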

The results largely align with Nvidia's recent claims that its Blackwell and Hopper chips offer large performance gains over competing solutions, including the AMD Instinct MI300X. Nvidia's own data shows that in Llama 2 tests, a system with eight MI300X accelerators reached only 23,515 tokens per second at 750 watts in offline mode, while the H100 achieved 24,525 tokens per second at 700 watts. Server mode follows the same pattern: the MI300X hit 21,028 tokens per second, while the H100 scored 21,606 tokens per second at lower wattage.
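Nvidia's offline figures also allow a rough efficiency comparison. The sketch below divides system throughput by total accelerator power. It assumes the quoted wattages are per-accelerator power ratings drawn by all eight chips and ignores host CPU, memory, networking, and cooling power. Server-mode wattages are not stated, so only offline mode is computed. The names are illustrative.

```python
# Offline-mode Llama 2 70B figures from Nvidia's comparison:
# 8-accelerator system throughput (tokens/s) and the quoted wattage.
OFFLINE = {
    "MI300X": {"tokens_per_s": 23_515, "watts": 750},
    "H100": {"tokens_per_s": 24_525, "watts": 700},
}
NUM_ACCELERATORS = 8


def tokens_per_joule(tokens_per_s: float, watts: float, n: int = NUM_ACCELERATORS) -> float:
    """Throughput per watt of accelerator power (tokens/s per W = tokens/J).

    Assumption: all n accelerators draw the quoted wattage; host CPU, memory,
    networking and cooling power are ignored.
    """
    return tokens_per_s / (watts * n)


def efficiency_table(figures: dict) -> dict:
    """Map each accelerator name to its estimated tokens per joule."""
    return {name: tokens_per_joule(f["tokens_per_s"], f["watts"]) for name, f in figures.items()}


if __name__ == "__main__":
    eff = efficiency_table(OFFLINE)
    for name, value in eff.items():
        print(f"{name}: {value:.2f} tokens per joule")
    print(f"H100 / MI300X efficiency ratio: {eff['H100'] / eff['MI300X']:.2f}")
```

Under those assumptions, the MI300X system works out to about 3.92 tokens per joule and the H100 system to about 4.38, roughly 12% more work per unit of accelerator energy for the H100 in offline mode.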