Google LLC is making available a new version of its popular Gemini 1.5 Flash artificial intelligence model that’s smaller and faster than the original.

It’s called Gemini 1.5 Flash-8B, and it’s much more affordable, at half the price. Gemini 1.5 Flash is the lightweight version of Google’s Gemini family of large language models, optimized for speed and efficiency and designed to be deployed on low-powered devices such as smartphones and sensors.

The company announced Gemini 1.5 Flash at Google I/O 2024 in May, and it was released to some paying customers a few weeks later, before becoming available for free via the Gemini mobile app, albeit with some restrictions on use.

It finally reached general availability at the end of June, offering competitive pricing and a 1 million-token context window combined with high-speed processing. At launch, Google noted that this context window is 60 times larger than that of OpenAI’s GPT-3.5 Turbo, and that the model is 40% faster on average.

The original version was designed to offer a very low input-token price, making it price-competitive for developers, and was adopted by customers such as Uber Technologies Inc., where it powers the Eats AI assistant in the company’s Uber Eats food delivery service.

With Gemini 1.5 Flash-8B, Google is introducing one of the most affordable lightweight LLMs on the market, with a 50% lower price and double the rate limits of the original 1.5 Flash. It also offers lower latency on small prompts, the company said.

Developers can access Gemini 1.5 Flash-8B for free via the Gemini API and Google AI Studio.
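For developers curious what that access looks like, the sketch below builds (but does not send) a request to the Gemini API’s REST `generateContent` method using only Python’s standard library. It assumes the `v1beta` endpoint, the model ID `gemini-1.5-flash-8b` and API-key authentication via the `x-goog-api-key` header; the helper names `build_generate_request` and `extract_text` are hypothetical, and the current Gemini API reference should be checked before relying on any of these details.

```python
import json
import urllib.request

# Assumed endpoint and model ID; verify against the current Gemini API reference.
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"
MODEL_ID = "gemini-1.5-flash-8b"


def build_generate_request(api_key: str, prompt: str,
                           model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a POST request to the generateContent method."""
    url = f"{BASE_URL}/{model}:generateContent"
    # Minimal request body: a single user turn containing one text part.
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # API key sent as a header rather than as a ?key= query parameter.
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Join the text parts of the first candidate in a generateContent response."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)
```

To actually call the model, pass the request to `urllib.request.urlopen()` with a real key and feed the decoded JSON response to `extract_text()`. Google’s official SDKs wrap the same method if a higher-level client is preferred.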

In a blog post, Gemini API Senior Product Manager Logan Kilpatrick explained that the company has made “considerable progress” in its efforts to improve 1.5 Flash, taking into consideration feedback from developers and “testing the limits” of what’s possible with such lightweight LLMs.

He explained that the company announced an experimental version of Gemini 1.5 Flash-8B last month. It has since been refined further and is now generally available for production use.

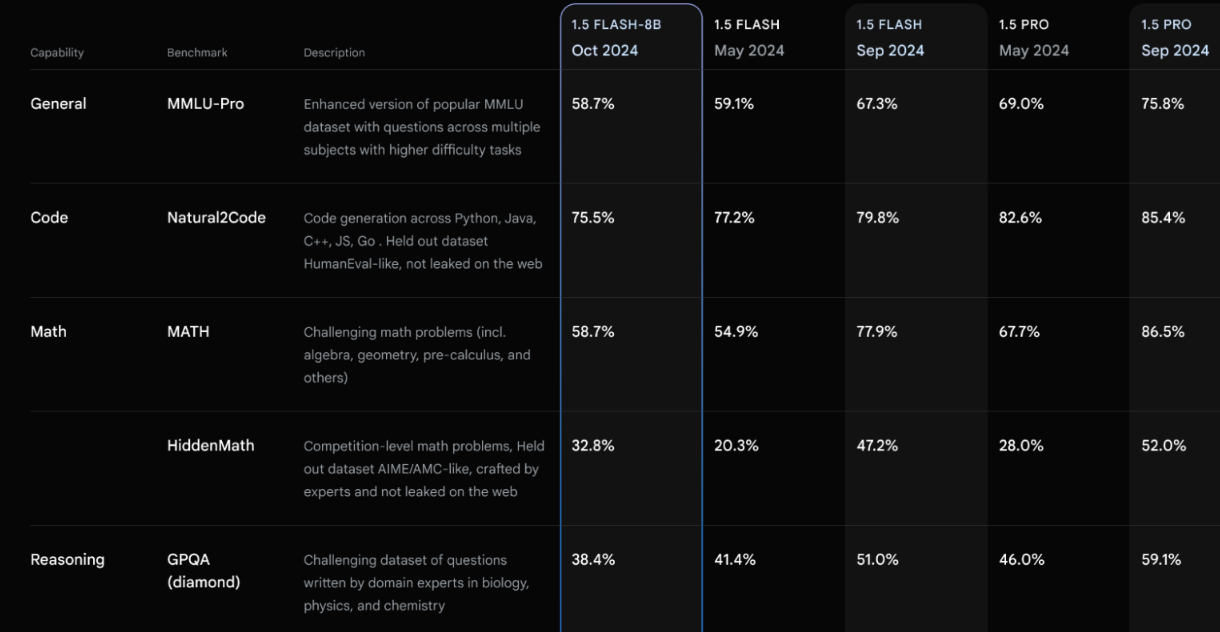

According to Kilpatrick, the 8B version nearly matches the performance of the original 1.5 Flash model on many key benchmarks, and has proven especially useful in tasks such as chat, transcription and long-context language translation.

“Our release of best-in-class small models continues to be informed by developer feedback and our own testing of what is possible with these models,” Kilpatrick added. “We see the most potential for this model in tasks ranging from high volume multimodal use cases to long context summarization tasks.”

Kilpatrick added that Gemini 1.5 Flash-8B offers the lowest cost per intelligence of any Gemini model released so far.

The pricing compares well with equivalent models from OpenAI and Anthropic PBC. In the case of OpenAI, its cheapest model is still GPT-4o mini, which costs $0.15 per million input tokens, though that drops by 50% for reused prompt prefixes and batched requests. Meanwhile, Anthropic’s most affordable model is Claude 3 Haiku at $0.25 per million input tokens, though the price drops to $0.03 per million for cached tokens.
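Because both rivals discount reused input, the effective price depends on how much of a workload hits the cache. The rough sketch below applies the input-token figures quoted above to a hypothetical workload; it models only input tokens, treats GPT-4o mini’s 50% discount as applying to cached prompt prefixes, and ignores output tokens, batch pricing and any other fees. The names `InputPricing` and `input_cost` are illustrative, not part of any vendor SDK.

```python
from dataclasses import dataclass

TOKENS_PER_MILLION = 1_000_000


@dataclass(frozen=True)
class InputPricing:
    """Input-token prices in US dollars per 1 million tokens."""
    name: str
    per_million: float
    cached_per_million: float


# Figures as quoted in the article (input tokens only).
GPT_4O_MINI = InputPricing("GPT-4o mini", 0.15, 0.15 * 0.5)  # 50% off reused prefixes
CLAUDE_3_HAIKU = InputPricing("Claude 3 Haiku", 0.25, 0.03)


def input_cost(pricing: InputPricing, total_tokens: int, cached_tokens: int = 0) -> float:
    """Dollar cost of total_tokens input tokens, cached_tokens of which hit the cache."""
    if not 0 <= cached_tokens <= total_tokens:
        raise ValueError("cached_tokens must be between 0 and total_tokens")
    fresh_tokens = total_tokens - cached_tokens
    dollars = (fresh_tokens * pricing.per_million
               + cached_tokens * pricing.cached_per_million)
    return dollars / TOKENS_PER_MILLION
```

For 10 million input tokens of which 6 million are cached, this works out to about $1.05 for GPT-4o mini (4M × $0.15 + 6M × $0.075) and about $1.18 for Claude 3 Haiku (4M × $0.25 + 6M × $0.03), so the cheaper list price does not always win once caching is factored in.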

In addition, Kilpatrick said the company is doubling 1.5 Flash-8B’s rate limits in an effort to make it more useful for simple, high-volume tasks. As a result, developers can now send up to 4,000 requests per minute, he said.
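High-volume clients usually throttle themselves rather than wait for the server to reject requests. The sketch below is a generic client-side token bucket, assuming the 4,000-requests-per-minute figure above; it is not part of any Google SDK, and actual limits may vary by account tier. A token bucket only approximates a per-minute quota, so it is prudent to configure it slightly below the published limit.

```python
import time
from typing import Callable, Optional


class TokenBucket:
    """Client-side limiter: bursts up to `capacity` requests, refilling at
    `rate_per_minute` tokens per minute."""

    def __init__(self, rate_per_minute: float, capacity: Optional[float] = None,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate_per_second = rate_per_minute / 60.0
        # Default to a burst of about one second's worth of requests.
        self.capacity = capacity if capacity is not None else max(1.0, self.rate_per_second)
        self.tokens = self.capacity
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        """Add tokens for the time elapsed since the last check, capped at capacity."""
        now = self.clock()
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate_per_second)
        self.last = now

    def try_acquire(self) -> bool:
        """Consume one token if available; return False if the caller should wait."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def acquire(self, sleep: Callable[[float], None] = time.sleep) -> None:
        """Block until a token is available, sleeping just long enough to refill one."""
        while not self.try_acquire():
            sleep(max((1 - self.tokens) / self.rate_per_second, 0.001))
```

A caller would create one shared `TokenBucket(3_900)` for headroom under the quota and call `acquire()` before each API request; injecting `clock` and `sleep` keeps the limiter testable without real waiting.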