Artificial intelligence startup and MIT spinoff Liquid AI Inc. today launched its first set of generative AI models, and they’re notably different from competing models because they’re built on a fundamentally new architecture.

The new models are being called “Liquid Foundation Models,” or LFMs, and they’re said to deliver impressive performance that’s on a par with, or even superior to, some of the best large language models available today.

The Boston-based startup was founded by a team of researchers from the Massachusetts Institute of Technology, including Ramin Hasani, Mathias Lechner, Alexander Amini and Daniela Rus. They’re said to be pioneers in the concept of “liquid neural networks,” which is a class of AI models that’s quite different from the Generative Pre-trained Transformer-based models we know and love today, such as OpenAI’s GPT series and Google LLC’s Gemini models.

The company’s mission is to create highly capable and efficient general-purpose models that can be used by organizations of all sizes. To do that, it’s building LFM-based AI systems that can work at every scale, from the network edge to enterprise-grade deployments.

What are LFMs?

According to Liquid, its LFMs represent a new generation of AI systems that are designed with both performance and efficiency in mind. They use minimal system memory while delivering exceptional computing power, the company explains.

They’re grounded in dynamical systems, numerical linear algebra and signal processing. That makes them ideal for handling various types of sequential data, including text, audio, images, video and signals.

Liquid AI first made headlines in December when it raised $37.6 million in seed funding. At the time, it explained that its LFMs are based on a newer liquid neural network, or LNN, architecture that was originally developed at MIT’s Computer Science and Artificial Intelligence Laboratory. LNNs are built from artificial neurons, or nodes, that transform data.

Whereas traditional deep learning models need thousands of neurons to perform computing tasks, LNNs can achieve the same performance with significantly fewer. They do this by combining those neurons with innovative mathematical formulations, enabling them to do much more with less.

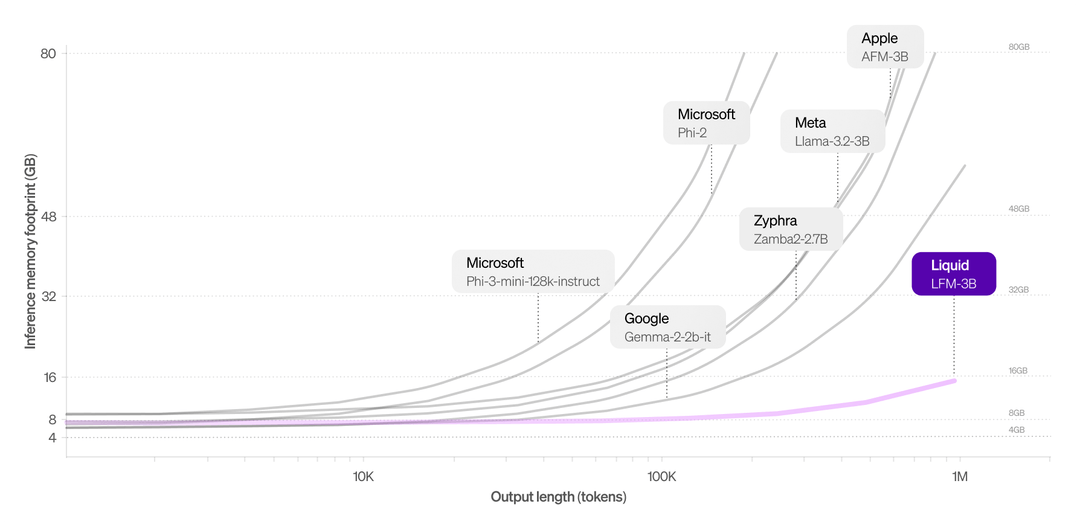

The startup says its LFMs retain this adaptable and efficient capability, which enables them to perform real-time adjustments during inference without the enormous computational overheads associated with traditional LLMs. As a result, they can handle up to 1 million tokens efficiently without any noticeable impact on memory usage.

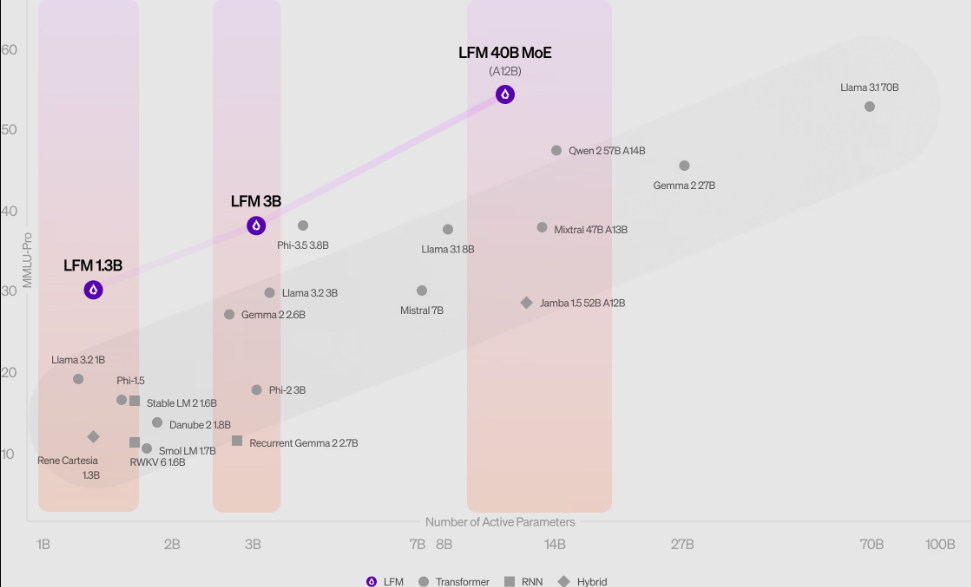

Liquid AI is kicking off with a family of three models at launch, including LFM-1B, which is a dense model with 1.3 billion parameters, designed for resource-constrained environments. Slightly more powerful is LFM-3B, which has 3.1 billion parameters and is aimed at edge deployments, such as mobile applications, robots and drones. Finally, there’s LFM-40B, which is a vastly more powerful “mixture of experts” model with 40.3 billion parameters, designed to be deployed on cloud servers in order to handle the most complex use cases.

The startup reckons its new models have already shown “state-of-the-art results” across a number of important AI benchmarks, and it believes they are shaping up to be formidable competitors to existing generative AI models such as ChatGPT.

Whereas traditional LLMs see a sharp increase in memory usage when performing long-context processing, the LFM-3B model notably maintains a much smaller memory footprint (above), which makes it an excellent choice for applications that require large amounts of sequential data to be processed. Example use cases might include chatbots and document analysis, the company said.
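To see why long contexts are costly for transformer-based models, it helps to run the arithmetic on the key-value cache, which stores two tensors per layer for every token processed. The Python sketch below is a rough illustration only: the layer count, head count and head size are hypothetical values chosen for the example, not figures published by Liquid AI or any other vendor, and the fixed-size state function is a generic stand-in for architectures whose memory does not grow with context length, not a description of how LFMs actually work.

```python
def kv_cache_bytes(num_tokens, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Approximate key-value cache size for a transformer decoder.

    Each layer stores one key vector and one value vector per token,
    hence the leading factor of 2. bytes_per_value=2 assumes 16-bit floats.
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * num_tokens


def fixed_state_bytes(num_layers, state_dim, bytes_per_value=2):
    """Memory for a hypothetical model that keeps one fixed-size state per layer.

    The result does not depend on how many tokens have been processed.
    """
    return num_layers * state_dim * bytes_per_value


# Hypothetical configuration, assumed purely for illustration.
HYPOTHETICAL = dict(num_layers=32, num_kv_heads=8, head_dim=128)

if __name__ == "__main__":
    gib = 1024 ** 3
    for n in (1_000, 32_000, 1_000_000):
        cache = kv_cache_bytes(n, **HYPOTHETICAL)
        state = fixed_state_bytes(num_layers=32, state_dim=4096)
        print(f"{n:>9} tokens: KV cache {cache / gib:.2f} GiB, fixed state {state / gib:.6f} GiB")
```

Under these assumed settings the cache grows linearly with the number of tokens, while the fixed-size state stays constant, which is the general shape of the contrast the company's chart describes.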

Strong performance on benchmarks

In terms of their performance, the LFMs delivered some impressive results, with LFM-1B outperforming transformer-based models in the same size category. Meanwhile, LFM-3B stands up well against models such as Microsoft Corp.’s Phi-3.5 and Meta Platforms Inc.’s Llama family. As for LFM-40B, the company says it can outperform larger models while maintaining a strong balance between performance and efficiency.

Liquid AI said the LFM-1B model put in an especially dominating performance on benchmarks such as MMLU and ARC-C, setting a new standard for 1B-parameter models.

The company is making its models available in early access via platforms such as Liquid Playground, Lambda – via its Chat and application programming interfaces – and Perplexity Labs. That will give organizations a chance to integrate its models into various AI systems and see how they perform in various deployment scenarios, including edge devices and on-premises.

One of the things it’s working on now is optimizing the LFMs to run on specific hardware built by Nvidia Corp., Advanced Micro Devices Inc., Apple Inc., Qualcomm Inc. and Cerebras Systems Inc., so users will be able to squeeze even more performance out of them by the time they reach general availability.

The company says it will release a series of technical blog posts that take a deep dive into the mechanics of each model ahead of their official launch. In addition, it’s encouraging red-teaming, inviting the AI community to test its LFMs to the limit, to see what they can and cannot yet do.