As great as user interviews usually are, they also come with a lot of waste. There’s waste from insights that are lost when something gets forgotten. There’s waste from gathering repetitive insights. And there’s waste from simply skipping debrief questions when running interviews.

All that time is wasted because most of us tend to rush things. We jump from one user interview to another, and whenever we capture cool insights, we want to act on them as soon as possible.

So, what’s the ideal way to go about user interviews?

Slowing down and taking the time for research debriefs will not only help you get more out of the interviews you’ve already conducted but also help you spot opportunities to improve future ones.

Consciously slowing down for proper interview debriefs is the best way to maximize learning. In this post, I’ll guide you through getting the most insight out of user interviews by using debrief questions.

What are debrief questions?

A user interview debrief in research isn’t a specific event or artifact. It’s everything you do between interviews — how you wrap up the previous interview and how you use that information to prepare for the next one.

Debriefs are most effective when done as a team. Proper debriefing in research offers several key benefits:

- Insight capture — While reviewing your interviews, odds are you’ll spot insights that you didn’t notice or note during the interview

- Insight retention — Spending some time diving deeper into the previous interview will help you remember the captured insights for much longer, both through extra personal revision and better documentation

- Team alignment — By asking debrief questions as a team, you have a built-in alignment tool that keeps everyone on the same page

- Bias mitigation — Reviewing and debriefing interviews as a team helps avoid biases that any particular individual could fall into

- Faster iteration — The more often you review your interviews, the faster you’ll spot opportunities to improve the next interview, and the more effective your process will be

A step-by-step guide to user interview debrief questions

To run an effective research debrief for a user interview, follow these four steps:

Step 1: Synthesize interesting observations

The first mistake I see people making when doing user interviews is not summarizing their findings properly.

Anyone can add a recording or an extensive notes file into a shared drive, but people won’t have time to watch or read it. And if they don’t, you’ll be the only beneficiary of the interview. That is, until you forget.

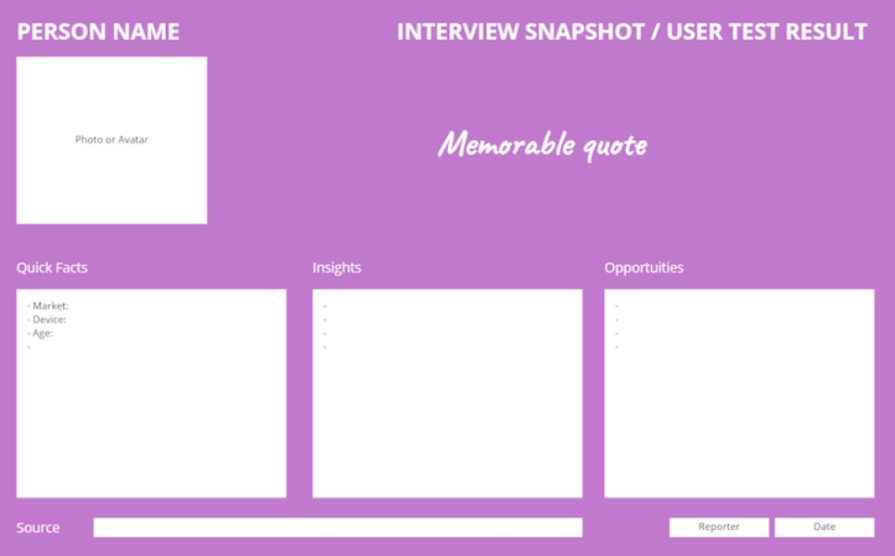

To avoid that waste, I prefer to spend time curating and summarizing the most important insights in a digestible, ideally one-minute read summary using a user interview snapshot like this:

Your user interview snapshot template should consist of elements like:

- User name and photo (to quickly recall the interview)

- Essential facts (to frame the context)

- Key insights (the main lessons learned)

- Opportunities (next steps based on the interview)

- Memorable quote (if you were to remember one thing from the interview)

- Admin data (date, interviewer, links, etc., so that it’s easier to follow up if needed)

This way, anyone in the company, including you, can quickly grasp the most important insights from the interview without spending too much time or energy.
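If your team keeps snapshots in a script or a small internal tool rather than a slide or doc, the template above could be sketched roughly as follows. This is only an illustration: the `InterviewSnapshot` class, its field names, and the `to_text` helper are assumptions made for this example, not part of any particular tool, and the sample values are invented.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class InterviewSnapshot:
    """One-minute summary of a single user interview (hypothetical structure)."""

    user_name: str
    essential_facts: list[str]   # context that frames the interview
    key_insights: list[str]      # the main lessons learned
    opportunities: list[str]     # next steps based on the interview
    memorable_quote: str         # the one thing to remember
    interview_date: date         # admin data
    interviewer: str             # admin data
    photo_url: str = ""          # optional; helps recall the interview
    links: list[str] = field(default_factory=list)  # recording, notes, etc.

    def to_text(self) -> str:
        """Render the snapshot as a short plain-text summary."""
        lines = [
            f"{self.user_name} "
            f"({self.interview_date.isoformat()}, interviewer: {self.interviewer})",
            "Facts: " + "; ".join(self.essential_facts),
            "Insights: " + "; ".join(self.key_insights),
            "Opportunities: " + "; ".join(self.opportunities),
            f'Quote: "{self.memorable_quote}"',
        ]
        if self.links:
            lines.append("Links: " + ", ".join(self.links))
        return "\n".join(lines)


# Invented sample data, purely for illustration.
example = InterviewSnapshot(
    user_name="Sample User",
    essential_facts=["Uses the product weekly"],
    key_insights=["Exports data to a spreadsheet after every session"],
    opportunities=["Explore a built-in reporting view"],
    memorable_quote="I only open the app to get my numbers out.",
    interview_date=date(2024, 1, 15),
    interviewer="Sample Interviewer",
)
print(example.to_text())
```

Keeping every snapshot in the same shape is what makes later comparison across interviews cheap, whatever format you actually store it in.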

Step 2: Dig deeper

Once I’ve captured the key learnings and had everyone on the team review them, I spend some time discussing them and trying to dig deeper. At this point in the process, I also review past user interview snapshots to see if there are any meaningful patterns.

Some high-level debrief questions I ask include:

- How do captured insights compare to previous interviews? Are there any commonalities between users sharing the same insights? Is there a dedicated user segment worth treating separately?

- Were there any game-changing insights that might be worth digging deeper into in further interviews?

- Did any learning contradict our key beliefs? If yes, how can we validate it further?

Usually, just ten minutes of unstructured discussion within the team is enough.

In the best-case scenario, you’ll build on top of each other’s observations and get more meaning from the interview. In the worst-case scenario, you’ll get a healthy dose of alignment and dramatically reduce the chances of forgetting what you learned from the debrief questions.

Step 3: Update key artifacts

Your research efforts should do two things: capture and synthesize learnings from individual user interviews, and build a higher-level understanding of your users.

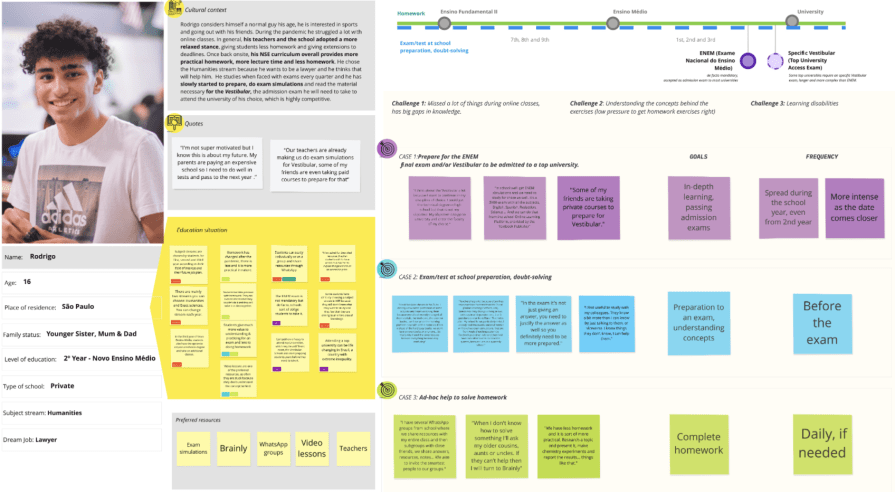

There are many UX artifacts and tools that support that, although my two go-to solutions are user personas (one per significant segment) and user journey maps (one per user persona).

Start by understanding which of your existing personas best represents the interviewee. Then, consider whether your most recent learnings are significant enough to update your main user persona.

The same goes for the user journey. After identifying which persona best represents the interviewee, I try to understand whether I learned anything new about their journey within the product and update the journey map accordingly.

You could also use empathy maps, opportunity solution trees, or any other tool to capture what the debrief questions reveal about your users. The goal here is to ensure that everything you learn from individual interviews adds up to a bigger picture.

Step 4: Plan the next actions

The last step in the process is to decide whether you should take any new actions based on what you learned in the previous steps. You have three options:

Option A: Do nothing

If you are still discovering new insights, the interviews are going quite smoothly, and you are ready to process more learning, just keep following your regular interview schedule.

If it’s not broken, don’t fix it.

Option B: Adjust

If you learned something critical or noticed that interviews aren’t as efficient as you’d like them to be, you might need to adjust your future interviews in one of three ways:

- Adjust questions — Remove repetitive questions or add new ones to explore fresh insights

- Adjust participants — If most of your interviews come from just one of your three key user personas, broaden your pool so that you get a diverse sample and don’t over-index on a specific sub-segment (unless you’re conducting very focused research)

- Adjust logistics — If you are often running out of time or have too much spare time left, adjust the length of the interview. If the attendance rate is lower or higher than expected, adjust the incentive size. If you see a potential to invite additional observers, or if it’s already crowded, change the number of attendees. And if there are technical difficulties, consider changing the platform

Option C: Stop interviews

Sometimes, it might make sense to stop the interviewing process altogether. Usually, it happens for one of three reasons:

- Game-changing insight — If you learn something that completely changes your beliefs, you might want to take a break and redirect your research to validate that insight

- Depletion of learnings — If you notice that insights and learning mostly repeat, you’ve reached the point of diminishing returns, and it makes sense to stop the interview cycle

- Grossly ineffective interviews — In some rare cases, small adjustments between interviews are not enough to fix the problem. If you realize you are asking the wrong people the wrong questions, it might make sense to take a break and start from scratch
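The three options can be read as a simple decision rule. Below is a minimal sketch of one way to write it down. Everything in it is an assumption for illustration: the `DebriefSignals` fields, the `plan_next_action` function, the order in which the checks run, and especially the `repetition_threshold` default of 0.8, which is an arbitrary placeholder rather than a recommended value. In practice this is a judgment call the team makes together.

```python
from dataclasses import dataclass
from enum import Enum


class NextAction(Enum):
    CONTINUE = "Option A: do nothing, keep the regular schedule"
    ADJUST = "Option B: adjust questions, participants, or logistics"
    STOP = "Option C: stop interviews"


@dataclass
class DebriefSignals:
    """What the team concluded in the debrief (hypothetical structure)."""

    game_changing_insight: bool      # something overturned a key belief
    grossly_ineffective: bool        # wrong people, wrong questions
    repeated_insight_share: float    # 0.0-1.0, share of insights already seen
    needs_adjustment: bool           # questions, participants, or logistics are off


def plan_next_action(
    signals: DebriefSignals, repetition_threshold: float = 0.8
) -> NextAction:
    """Map debrief signals to one of the three options.

    The precedence (stop, then adjust, then continue) is an assumption.
    """
    if signals.game_changing_insight or signals.grossly_ineffective:
        return NextAction.STOP
    if signals.repeated_insight_share >= repetition_threshold:
        # Depletion of learnings: diminishing returns.
        return NextAction.STOP
    if signals.needs_adjustment:
        return NextAction.ADJUST
    return NextAction.CONTINUE


# Still learning new things, but interviews keep running out of time.
signals = DebriefSignals(
    game_changing_insight=False,
    grossly_ineffective=False,
    repeated_insight_share=0.3,
    needs_adjustment=True,
)
print(plan_next_action(signals).value)
```

The value of writing the rule down is less about automation and more about making the team state explicitly which signal triggered the decision.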

Summary

It’s better to run ten interviews with proper debriefs in between than to mindlessly rush fifteen of them.

By now, you know that properly leveraging debrief questions between interviews will help you:

- Iterate on your interview approach faster

- Capture and retain more learnings

- Align the whole team

- Mitigate bias

Start by synthesizing learnings into an easily digestible form, such as an interview snapshot. Then, talk with the whole team and try to dig deeper by comparing outcomes to previous interviews, identifying patterns, and comparing them to other research. Once you understand the interview outcome more deeply, map what you learned onto your existing artifacts, such as personas and user journeys. Lastly, decide whether to keep going, adjust, or stop further interviews.

Although investing an extra hour in an already hour-long interview might seem wasteful, skipping the research debrief can result in missed opportunities and wasted effort.